In the previous posts on Linear Regression (using Batch Gradient Descent, Stochastic Gradient Descent, and the closed-form solution), we discussed a couple of different ways to estimate the parameter vector in the least-squares sense for a given training set. But how does the least-squares criterion hold up when the training set is corrupted by noise? In this post, let us discuss the case where the training set is corrupted by Gaussian noise.

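For the training set, the system model is

$$y^{(i)} = \theta^T x^{(i)} + \epsilon^{(i)}, \quad i = 1, \dots, m,$$

where $x^{(i)}$ is the input sequence, $y^{(i)}$ is the output sequence, $\theta$ is the parameter vector, $\epsilon^{(i)}$ is the noise in the observations, and $m$ is the number of training examples.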
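To make the model concrete, the sketch below generates a synthetic training set from $y^{(i)} = \theta^T x^{(i)} + \epsilon^{(i)}$ using NumPy. The helper `make_training_set`, the parameter values, `m`, and `sigma` are illustrative assumptions rather than values from the earlier posts; each row of `X` is one input $x^{(i)}$ with a leading 1 as the intercept term.

```python
import numpy as np

def make_training_set(theta, m, sigma, seed=0):
    """Hypothetical helper: draw m examples from y = theta^T x + eps, eps ~ N(0, sigma^2)."""
    rng = np.random.default_rng(seed)
    n = theta.shape[0]
    # Each row of X is one input x^(i); the first column is the constant intercept term.
    X = np.column_stack([np.ones(m), rng.uniform(-1.0, 1.0, size=(m, n - 1))])
    # i.i.d. zero-mean Gaussian noise with standard deviation sigma.
    eps = rng.normal(0.0, sigma, size=m)
    y = X @ theta + eps
    return X, y, eps

theta_true = np.array([1.0, 2.0, -0.5])
X, y, eps = make_training_set(theta_true, m=1000, sigma=0.3)
print(X.shape, y.shape)        # (1000, 3) (1000,)
print(eps.mean(), eps.std())   # roughly 0 and 0.3
```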

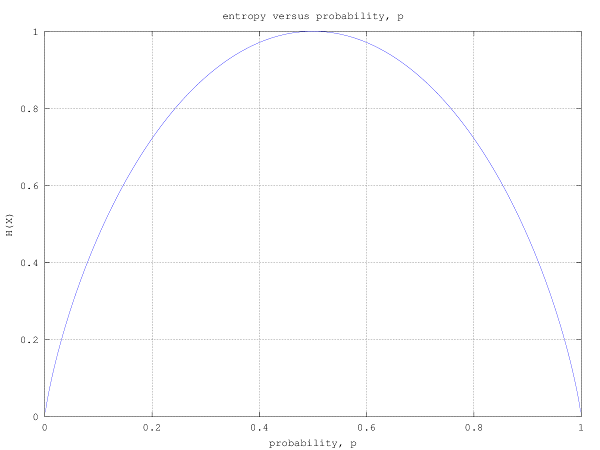

Let us assume that the noise terms $\epsilon^{(i)}$ are independent and identically distributed (i.i.d.), each following a Gaussian distribution with mean 0 and variance $\sigma^2$.

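The probability density function of the noise term can then be written as

$$p\left(\epsilon^{(i)}\right) = \frac{1}{\sqrt{2\pi}\,\sigma} \exp\left(-\frac{\left(\epsilon^{(i)}\right)^2}{2\sigma^2}\right).$$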
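The density above can be checked numerically. This sketch, with a hypothetical helper `noise_pdf` and an assumed `sigma = 0.3`, confirms that it integrates to 1 (using a simple Riemann sum over a wide grid) and that its peak at $\epsilon = 0$ equals $\frac{1}{\sqrt{2\pi}\,\sigma}$.

```python
import numpy as np

def noise_pdf(eps, sigma):
    """Gaussian density with mean 0 and variance sigma^2, evaluated elementwise."""
    return np.exp(-eps**2 / (2.0 * sigma**2)) / (np.sqrt(2.0 * np.pi) * sigma)

sigma = 0.3
grid = np.linspace(-10 * sigma, 10 * sigma, 20001)
dx = grid[1] - grid[0]
# A valid density integrates to 1; the tails beyond 10 sigma are negligible.
area = np.sum(noise_pdf(grid, sigma)) * dx

print(area)                   # approximately 1.0
print(noise_pdf(0.0, sigma))  # peak value 1/(sqrt(2*pi)*sigma), about 1.33
```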

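This means that, since $\epsilon^{(i)} = y^{(i)} - \theta^T x^{(i)}$, the probability density of the output $y^{(i)}$ given $x^{(i)}$ and parameterised by $\theta$ is

$$p\left(y^{(i)} \mid x^{(i)}; \theta\right) = \frac{1}{\sqrt{2\pi}\,\sigma} \exp\left(-\frac{\left(y^{(i)} - \theta^T x^{(i)}\right)^2}{2\sigma^2}\right).$$

In other words, $y^{(i)} \mid x^{(i)}; \theta \sim \mathcal{N}\left(\theta^T x^{(i)}, \sigma^2\right)$.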
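In code, $p(y^{(i)} \mid x^{(i)}; \theta)$ is simply the noise density evaluated at the residual $y^{(i)} - \theta^T x^{(i)}$. The sketch below assumes a two-element $\theta$ (intercept plus one feature) and `sigma = 0.3`; the function names are hypothetical. It shows the density peaking at $y^{(i)} = \theta^T x^{(i)}$ and falling off symmetrically around it.

```python
import numpy as np

def noise_pdf(eps, sigma):
    """Zero-mean Gaussian density with variance sigma^2."""
    return np.exp(-eps**2 / (2.0 * sigma**2)) / (np.sqrt(2.0 * np.pi) * sigma)

def conditional_pdf(y_i, x_i, theta, sigma):
    """p(y_i | x_i; theta): the noise density evaluated at the residual y_i - theta^T x_i."""
    residual = y_i - x_i @ theta
    return noise_pdf(residual, sigma)

theta = np.array([1.0, 2.0])
x_i = np.array([1.0, 0.5])   # intercept term and one feature
sigma = 0.3
mean_y = x_i @ theta         # theta^T x_i = 2.0

print(conditional_pdf(mean_y, x_i, theta, sigma))        # peak of the density, about 1.33
print(conditional_pdf(mean_y + 0.3, x_i, theta, sigma))  # one sigma away, about 0.81
```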

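Let us write the likelihood of $\theta$, given all the observations of the input sequence $X$ (whose rows are the $x^{(i)T}$) and the output $\vec{y}$, as

$$L(\theta) = L(\theta; X, \vec{y}) = p(\vec{y} \mid X; \theta).$$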

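Given that all the observations are independent, the likelihood of $\theta$ is

$$L(\theta) = \prod_{i=1}^{m} p\left(y^{(i)} \mid x^{(i)}; \theta\right) = \prod_{i=1}^{m} \frac{1}{\sqrt{2\pi}\,\sigma} \exp\left(-\frac{\left(y^{(i)} - \theta^T x^{(i)}\right)^2}{2\sigma^2}\right).$$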
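Evaluating this product directly works for a handful of observations, but with $\sigma = 1$ every factor is at most $1/\sqrt{2\pi} \approx 0.4$, so for a large training set the product underflows double precision to exactly 0.0 while the sum of log-densities stays finite. This is one practical reason, beyond the algebra, for working with the logarithm. The sketch assumes $\sigma = 1$, an intercept plus one standard-normal feature, and arbitrary sample sizes of 5 and 1000.

```python
import numpy as np

rng = np.random.default_rng(1)
theta = np.array([1.0, 2.0])
sigma = 1.0  # every density value is then at most 1/sqrt(2*pi), about 0.4

def sample(m):
    """Assumed setup: intercept column plus one standard-normal feature."""
    X = np.column_stack([np.ones(m), rng.normal(size=m)])
    y = X @ theta + rng.normal(0.0, sigma, size=m)
    return X, y

def densities(X, y):
    """p(y_i | x_i; theta) for every observation, evaluated at the true theta."""
    r = y - X @ theta
    return np.exp(-r**2 / (2.0 * sigma**2)) / (np.sqrt(2.0 * np.pi) * sigma)

X_small, y_small = sample(5)
X_large, y_large = sample(1000)

L_small = np.prod(densities(X_small, y_small))          # small but representable
L_large = np.prod(densities(X_large, y_large))          # underflows to 0.0 in float64
ll_large = np.sum(np.log(densities(X_large, y_large)))  # finite log-likelihood

print(L_small, L_large, ll_large)
```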

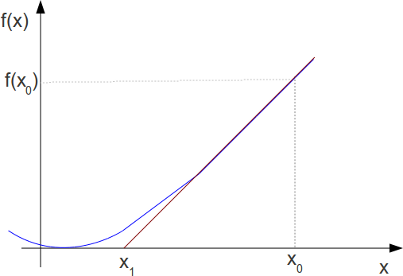

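Taking the logarithm of both sides, the log-likelihood function is

$$\begin{aligned} \ell(\theta) = \log L(\theta) &= \sum_{i=1}^{m} \log \left[ \frac{1}{\sqrt{2\pi}\,\sigma} \exp\left(-\frac{\left(y^{(i)} - \theta^T x^{(i)}\right)^2}{2\sigma^2}\right) \right] \\ &= m \log \frac{1}{\sqrt{2\pi}\,\sigma} - \frac{1}{\sigma^2} \cdot \frac{1}{2} \sum_{i=1}^{m} \left(y^{(i)} - \theta^T x^{(i)}\right)^2. \end{aligned}$$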
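As a numerical check of the expression above, the sketch below compares the closed form of $\ell(\theta)$ with the logarithm of the product of the individual densities on a small synthetic data set. The function names, the data, and the candidate $\theta$ are assumptions for illustration; with only 50 observations the product does not underflow, so both routes can be compared directly.

```python
import numpy as np

def log_likelihood(theta, X, y, sigma):
    """Closed form: m*log(1/(sqrt(2*pi)*sigma)) - (1/sigma^2) * (1/2) * sum of squared residuals."""
    m = y.shape[0]
    r = y - X @ theta
    return m * np.log(1.0 / (np.sqrt(2.0 * np.pi) * sigma)) - 0.5 * np.sum(r**2) / sigma**2

def log_of_product(theta, X, y, sigma):
    """log of prod_i p(y_i | x_i; theta), computed the direct way."""
    r = y - X @ theta
    dens = np.exp(-r**2 / (2.0 * sigma**2)) / (np.sqrt(2.0 * np.pi) * sigma)
    return np.log(np.prod(dens))

rng = np.random.default_rng(2)
sigma = 0.5
X = np.column_stack([np.ones(50), rng.normal(size=50)])
y = X @ np.array([1.0, 2.0]) + rng.normal(0.0, sigma, size=50)
theta = np.array([0.8, 2.3])  # any candidate parameter vector

print(log_likelihood(theta, X, y, sigma), log_of_product(theta, X, y, sigma))
```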

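From the above expression, we can see that maximizing the likelihood function is the same as minimizing

$$J(\theta) = \frac{1}{2} \sum_{i=1}^{m} \left(y^{(i)} - \theta^T x^{(i)}\right)^2,$$

because the first term, $m \log \frac{1}{\sqrt{2\pi}\,\sigma}$, does not depend on $\theta$, and the second term is $-J(\theta)$ scaled by the positive constant $\frac{1}{\sigma^2}$.

Recall: this is the same cost function that was minimized in the least-squares solution.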
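The equivalence can also be checked numerically by maximizing $\ell(\theta)$ directly and comparing the result with the least-squares solution. The sketch below uses plain gradient ascent, with gradient $\nabla_\theta \ell(\theta) = \frac{1}{\sigma^2} \sum_{i} \left(y^{(i)} - \theta^T x^{(i)}\right) x^{(i)}$, as one simple choice of optimizer, and NumPy's `np.linalg.lstsq` for least squares. The data, the step-size rule, and the iteration count are assumptions made for illustration.

```python
import numpy as np

rng = np.random.default_rng(3)
m, sigma = 200, 0.5
theta_true = np.array([1.0, 2.0, -0.5])
X = np.column_stack([np.ones(m), rng.normal(size=(m, 2))])
y = X @ theta_true + rng.normal(0.0, sigma, size=m)

def grad_log_likelihood(theta):
    """Gradient of l(theta): (1/sigma^2) * X^T (y - X theta)."""
    return X.T @ (y - X @ theta) / sigma**2

# Maximum likelihood by plain gradient ascent; the step size sigma^2 / lambda_max(X^T X)
# keeps the iteration stable for this concave quadratic objective.
step = sigma**2 / np.linalg.norm(X, 2) ** 2
theta_ml = np.zeros(3)
for _ in range(2000):
    theta_ml = theta_ml + step * grad_log_likelihood(theta_ml)

# Least-squares solution of min (1/2) * sum (y_i - theta^T x_i)^2.
theta_ls, *_ = np.linalg.lstsq(X, y, rcond=None)

print(theta_ml)
print(theta_ls)
print(np.allclose(theta_ml, theta_ls))  # True
```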

Summarizing:

a) When the observations are corrupted by independent, identically distributed, zero-mean Gaussian noise, the least-squares solution is the Maximum Likelihood estimate of the parameter vector $\theta$.

b) The noise variance $\sigma^2$ does not play a role in this minimization. However, if the noise variance differs from one observation to another, it needs to be factored in. We will discuss this in another post.
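To see point (b) numerically, note that $\sigma^2$ only rescales the gradient of $\ell(\theta)$, so the gradient vanishes at the same $\theta$ for every noise variance. The sketch below, on assumed synthetic data with a common $\sigma^2$ for all observations, evaluates that gradient at the least-squares solution for a few values of `sigma`.

```python
import numpy as np

rng = np.random.default_rng(4)
m = 100
X = np.column_stack([np.ones(m), rng.normal(size=m)])
y = X @ np.array([1.0, 2.0]) + rng.normal(0.0, 0.5, size=m)

theta_ls, *_ = np.linalg.lstsq(X, y, rcond=None)

results = {}
for sigma in (0.1, 0.5, 2.0):
    # Gradient of l(theta) = m*log(1/(sqrt(2*pi)*sigma)) - J(theta)/sigma^2 at theta_ls.
    grad = X.T @ (y - X @ theta_ls) / sigma**2
    # sigma only rescales the gradient, so it is (numerically) zero at theta_ls for every sigma.
    results[sigma] = np.allclose(grad, 0.0, atol=1e-8)

print(results)
```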

References

CS229 Lecture Notes 1, Chapter 3: Probabilistic Interpretation, Prof. Andrew Ng