In the previous post on Batch Gradient Descent and Stochastic Gradient Descent, we looked at two iterative methods for finding the parameter vector $\theta$ which minimizes the square of the error between the predicted value $h_\theta(x^{(i)})$ and the actual output $y^{(i)}$ for all $m$ values in the training set.

A closed-form solution for the parameter vector $\theta$ is also possible, and in this post let us explore it. Of course, I thank Prof. Andrew Ng for making all this material available in the public domain (Lecture Notes 1).

Notations

Let’s revisit the notations.

Let $m$ be the number of training examples (in our case, the top 50 articles),

$x$ be the input sequence (the page index),

$y$ be the output sequence (the page views for each page index),

$n$ be the number of features/parameters ($n = 2$ for our example).

The pair $(x^{(i)}, y^{(i)})$ corresponds to the $i^{th}$ training example.

The predicted number of page views for a given page index is computed using a hypothesis defined as:

$$h_\theta(x) = \theta_0 x_0 + \theta_1 x_1,$$

where $x_1$ is the page index and $x_0 = 1$.
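As a minimal sketch in Python (standard library only), the hypothesis can be written as a small function. The name `hypothesis` and the $\theta$ values below are illustrative assumptions, not fitted values from this post.

```python
def hypothesis(theta, page_index):
    """h_theta(x) = theta_0 * x_0 + theta_1 * x_1, with x_0 = 1 and x_1 = page index."""
    x0, x1 = 1.0, float(page_index)
    return theta[0] * x0 + theta[1] * x1

# Illustrative parameters only (not fitted values).
theta = (100.0, -2.0)
print(hypothesis(theta, 10))  # 100 - 20 = 80.0
```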

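Formulating in matrix notation:

The input sequence is

$$X = \begin{bmatrix} x_0^{(1)} & x_1^{(1)} \\ x_0^{(2)} & x_1^{(2)} \\ \vdots & \vdots \\ x_0^{(m)} & x_1^{(m)} \end{bmatrix}$$

of dimension [m x n].

The measured values are

$$Y = \begin{bmatrix} y^{(1)} \\ y^{(2)} \\ \vdots \\ y^{(m)} \end{bmatrix}$$

of dimension [m x 1].

The parameter vector is

$$\theta = \begin{bmatrix} \theta_0 \\ \theta_1 \end{bmatrix}$$

of dimension [n x 1].

The hypothesis term is

$$h_\theta(X) = \begin{bmatrix} h_\theta(x^{(1)}) \\ h_\theta(x^{(2)}) \\ \vdots \\ h_\theta(x^{(m)}) \end{bmatrix}$$

of dimension [m x 1].

From the above,

$$h_\theta(X) = X\theta.$$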
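A small Python sketch of the matrix formulation, assuming a toy list of three page indices and illustrative $\theta$ values (the helper names `design_matrix` and `mat_vec` are hypothetical). Building $X$ with a leading column of ones and multiplying by $\theta$ gives the same numbers as evaluating $h_\theta(x)$ row by row.

```python
def design_matrix(page_indices):
    """Build X (m x n, n = 2): each row is [x_0, x_1] = [1, page index]."""
    return [[1.0, float(p)] for p in page_indices]

def mat_vec(A, v):
    """Matrix-vector product A v, with A stored as a list of rows."""
    return [sum(a * b for a, b in zip(row, v)) for row in A]

X = design_matrix([1, 2, 3])
theta = [100.0, -2.0]       # illustrative values, not fitted
print(mat_vec(X, theta))    # [98.0, 96.0, 94.0], i.e. h_theta(X) = X theta
```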

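Recall: our goal is to find the parameter vector $\theta$ which minimizes the square of the error between the predicted value $h_\theta(x^{(i)})$ and the actual output $y^{(i)}$ for all $m$ values in the training set, i.e.

$$\min_{\theta} \sum_{i=1}^{m}\left(h_\theta(x^{(i)}) - y^{(i)}\right)^2.$$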

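From matrix algebra, we know that for any column vector $z$, $z^T z = \sum_i z_i^2$. Taking $z = X\theta - Y$, whose $i^{th}$ entry is $h_\theta(x^{(i)}) - y^{(i)}$,

$$\left(X\theta - Y\right)^T\left(X\theta - Y\right) = \sum_{i=1}^{m}\left(h_\theta(x^{(i)}) - y^{(i)}\right)^2.$$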

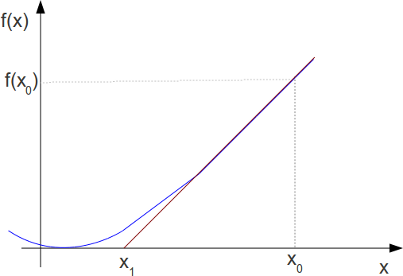
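To make the identity concrete, here is a Python sketch on a hypothetical three-example training set: the inner product of the residual vector $z = X\theta - Y$ with itself equals the explicit sum of squared errors.

```python
# Hypothetical toy training set (m = 3); theta is illustrative, not fitted.
X = [[1.0, 1.0], [1.0, 2.0], [1.0, 3.0]]
Y = [97.0, 97.0, 95.0]
theta = [100.0, -2.0]

# Residual vector z = X*theta - Y
z = [sum(a * t for a, t in zip(row, theta)) - y for row, y in zip(X, Y)]

# z^T z as the inner product of z with itself
z_t_z = sum(zi * zj for zi, zj in zip(z, z))

# Explicit sum of squared errors, using h_theta(x) = theta_0 * x_0 + theta_1 * x_1
sse = sum((theta[0] * row[0] + theta[1] * row[1] - y) ** 2 for row, y in zip(X, Y))

print(z, z_t_z, sse)  # [1.0, -1.0, -1.0] 3.0 3.0
```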

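So we can now define the cost function as

$$J(\theta) = \frac{1}{2}\left(X\theta - Y\right)^T\left(X\theta - Y\right) = \frac{1}{2}\sum_{i=1}^{m}\left(h_\theta(x^{(i)}) - y^{(i)}\right)^2.$$

The factor $\frac{1}{2}$ only simplifies the derivative; it does not change the minimizing $\theta$.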
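A direct Python translation of $J(\theta)$, assuming the same kind of hypothetical toy data (the function name `cost` is illustrative):

```python
def cost(X, Y, theta):
    """J(theta) = 1/2 (X theta - Y)^T (X theta - Y)."""
    residual = [sum(a * t for a, t in zip(row, theta)) - y for row, y in zip(X, Y)]
    return 0.5 * sum(r * r for r in residual)

# Hypothetical toy data (m = 3, n = 2); theta values are illustrative.
X = [[1.0, 1.0], [1.0, 2.0], [1.0, 3.0]]
Y = [97.0, 97.0, 95.0]
print(cost(X, Y, [100.0, -2.0]))  # 0.5 * (1 + 1 + 1) = 1.5
```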

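To find the value of $\theta$ which minimizes $J(\theta)$, we differentiate $J(\theta)$ with respect to $\theta$. Expanding the product and applying the matrix calculus identities a) and b) from the note below:

$$\nabla_\theta J(\theta) = \frac{1}{2}\nabla_\theta\left(\theta^T X^T X\theta - \theta^T X^T Y - Y^T X\theta + Y^T Y\right) = \frac{1}{2}\left(2X^T X\theta - X^T Y - X^T Y\right) = X^T X\theta - X^T Y.$$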
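The gradient $X^T X\theta - X^T Y$ can equivalently be computed as $X^T(X\theta - Y)$. The Python sketch below, on hypothetical toy data, compares that analytic gradient with a central finite-difference estimate of $J(\theta)$; agreement is a quick sanity check of the derivation, not a proof. The helper names are illustrative.

```python
def residual(X, Y, theta):
    """X theta - Y."""
    return [sum(a * t for a, t in zip(row, theta)) - y for row, y in zip(X, Y)]

def cost(X, Y, theta):
    """J(theta) = 1/2 (X theta - Y)^T (X theta - Y)."""
    r = residual(X, Y, theta)
    return 0.5 * sum(ri * ri for ri in r)

def gradient(X, Y, theta):
    """Analytic gradient X^T X theta - X^T Y, computed as X^T (X theta - Y)."""
    r = residual(X, Y, theta)
    return [sum(X[i][k] * r[i] for i in range(len(X))) for k in range(len(theta))]

def numeric_gradient(X, Y, theta, h=1e-5):
    """Central finite differences of J, used only as a cross-check."""
    g = []
    for k in range(len(theta)):
        tp = list(theta); tp[k] += h
        tm = list(theta); tm[k] -= h
        g.append((cost(X, Y, tp) - cost(X, Y, tm)) / (2 * h))
    return g

X = [[1.0, 1.0], [1.0, 2.0], [1.0, 3.0]]
Y = [97.0, 97.0, 95.0]
theta = [100.0, -2.0]                   # illustrative, not the minimizer
print(gradient(X, Y, theta))            # [-1.0, -4.0]
print(numeric_gradient(X, Y, theta))    # close to [-1.0, -4.0]
```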

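At the minimum the gradient vanishes, so we set $\nabla_\theta J(\theta) = 0$:

$$X^T X\theta - X^T Y = 0 \quad\Rightarrow\quad X^T X\theta = X^T Y.$$

Solving (assuming $X^T X$ is invertible, which holds when the columns of $X$ are linearly independent),

$$\theta = \left(X^T X\right)^{-1} X^T Y.$$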
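A minimal Python sketch of the closed form, assuming a small synthetic data set that lies exactly on $y = 3 + 2x$. Rather than forming $(X^T X)^{-1}$ explicitly, it solves $X^T X\theta = X^T Y$ by Gaussian elimination with partial pivoting, which gives the same $\theta$ when $X^T X$ is invertible. The helper names are hypothetical.

```python
def transpose(A):
    """Return A^T for a matrix stored as a list of rows."""
    return [list(col) for col in zip(*A)]

def mat_mul(A, B):
    """Matrix product A B (lists of rows)."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]

def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(A)
    M = [[float(v) for v in A[i]] + [float(b[i])] for i in range(n)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(M[r][c]))
        if abs(M[p][c]) < 1e-12:
            raise ValueError("X^T X is singular: columns of X are linearly dependent")
        M[c], M[p] = M[p], M[c]
        for r in range(c + 1, n):
            f = M[r][c] / M[c][c]
            for k in range(c, n + 1):
                M[r][k] -= f * M[c][k]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (M[r][n] - sum(M[r][k] * x[k] for k in range(r + 1, n))) / M[r][r]
    return x

def normal_equation(X, Y):
    """theta = (X^T X)^{-1} X^T Y, computed by solving (X^T X) theta = X^T Y."""
    Xt = transpose(X)
    XtX = mat_mul(Xt, X)
    XtY = [row[0] for row in mat_mul(Xt, [[y] for y in Y])]
    return solve(XtX, XtY)

# Synthetic data lying exactly on y = 3 + 2x, so theta should be close to [3, 2].
X = [[1.0, float(x)] for x in range(1, 6)]
Y = [3.0 + 2.0 * x for x in range(1, 6)]
theta = normal_equation(X, Y)
print(theta)
```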

Note (Update 7th Dec 2011):

As pointed out by Mr. Andre KrishKevich, the above solution is the same as the formula for a linear least squares fit (see linear least squares and least squares on Wikipedia).

Matlab/Octave code snippet

```matlab
clear ; close all;
x = [1:50].';
y = [4554 3014 2171 1891 1593 1532 1416 1326 1297 1266 ...
     1248 1052  951  936  918  797  743  665  662  652 ...
      629  609  596  590  582  547  486  471  462  435 ...
      424  403  400  386  386  384  384  383  370  365 ...
      360  358  354  347  320  319  318  311  307  290].';
m = length(y);        % store the number of training examples
x = [ones(m,1) x];    % Add a column of ones to x
n = size(x,2);        % number of features
theta_vec = inv(x'*x)*x'*y;
```

The computed values are $\theta_0 \approx 1840.62$ and $\theta_1 \approx -39.82$.
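As a cross-check of the Matlab/Octave snippet, here is the same computation in Python on the same 50 data points. Since $n = 2$, this sketch uses the closed-form inverse of the 2x2 matrix $X^T X$ instead of a general solver; the variable names are illustrative.

```python
# Page views of the top 50 articles, copied from the Matlab/Octave snippet above.
y = [4554, 3014, 2171, 1891, 1593, 1532, 1416, 1326, 1297, 1266,
     1248, 1052,  951,  936,  918,  797,  743,  665,  662,  652,
      629,  609,  596,  590,  582,  547,  486,  471,  462,  435,
      424,  403,  400,  386,  386,  384,  384,  383,  370,  365,
      360,  358,  354,  347,  320,  319,  318,  311,  307,  290]
x = list(range(1, len(y) + 1))   # page index 1..50
m = len(y)

# Entries of X^T X = [[m, s_x], [s_x, s_xx]] and X^T Y = [s_y, s_xy] for X = [ones(m,1) x]
s_x = sum(x)
s_xx = sum(xi * xi for xi in x)
s_y = sum(y)
s_xy = sum(xi * yi for xi, yi in zip(x, y))

# theta = (X^T X)^{-1} X^T Y, using the explicit inverse of a 2x2 matrix
det = m * s_xx - s_x * s_x
theta0 = (s_xx * s_y - s_x * s_xy) / det
theta1 = (m * s_xy - s_x * s_y) / det
print(theta0, theta1)
```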

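Note:

a) $\nabla_x \left(x^T A x\right) = \left(A + A^T\right)x$, which reduces to $2Ax$ when $A$ is symmetric (as $X^T X$ is).

(Refer: Matrix calculus notes – University of Colorado)

b) $\nabla_x \left(b^T x\right) = \nabla_x \left(x^T b\right) = b$.

(Refer: Matrix Calculus Wiki)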
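Both identities can be checked numerically. This Python sketch uses an arbitrary non-symmetric $A$, a vector $b$ and a point $x$ (all made-up values) and compares central finite differences with the closed-form gradients. In the derivation, a) is applied with $A = X^T X$, which is symmetric, so $(A + A^T)\theta = 2X^T X\theta$.

```python
def quad(A, x):
    """x^T A x"""
    n = len(x)
    return sum(x[i] * A[i][j] * x[j] for i in range(n) for j in range(n))

def lin(b, x):
    """b^T x"""
    return sum(bi * xi for bi, xi in zip(b, x))

def num_grad(f, x, h=1e-6):
    """Central finite-difference gradient of f at x."""
    g = []
    for k in range(len(x)):
        xp = list(x); xp[k] += h
        xm = list(x); xm[k] -= h
        g.append((f(xp) - f(xm)) / (2 * h))
    return g

A = [[2.0, 1.0], [3.0, 4.0]]   # deliberately non-symmetric
b = [5.0, -1.0]
x = [0.5, -2.0]

# a) gradient of x^T A x is (A + A^T) x
expected_a = [sum((A[i][j] + A[j][i]) * x[j] for j in range(2)) for i in range(2)]
# b) gradient of b^T x is b
expected_b = b

print(num_grad(lambda v: quad(A, v), x), expected_a)  # both close to [-6.0, -14.0]
print(num_grad(lambda v: lin(b, v), x), expected_b)   # both close to [5.0, -1.0]
```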

References

An Application of Supervised Learning – Autonomous Driving (Video Lecture, Class 2)

CS 229 Machine Learning Course Materials

Matrix calculus notes – University of Colorado


You use:

theta_vec = inv(x'*x)*x'*y;

While that is theoretically correct, why not just use:

theta_vec = x \ y;

@Will: Yes, I could have used that. Just that I preferred to use the expanded version.

And for reference, from Mathworks on mldivide \

http://www.mathworks.in/help/techdoc/ref/mldivide.html

“If the equation Ax = b does not have a solution (and A is not a square matrix), x = A\b returns a least squares solution — in other words, a solution that minimizes the length of the vector Ax – b, which is equal to norm(A*x – b).”
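For illustration only: many least-squares solvers avoid forming $X^T X$ (which squares the condition number) and work with a QR factorization of $X$ instead. The Python sketch below uses modified Gram-Schmidt QR on a hypothetical four-point data set. It is not a reimplementation of MATLAB's `mldivide`, just an example of the same least-squares idea; for well-conditioned data it returns the same $\theta$ as the normal equations. The function name `qr_least_squares` is hypothetical.

```python
import math

def qr_least_squares(A, y):
    """Least-squares solution of A theta ~= y via modified Gram-Schmidt QR.
    Never forms A^T A, so it avoids squaring the condition number."""
    m, n = len(A), len(A[0])
    Q = []                                  # orthonormal columns of A, one list each
    R = [[0.0] * n for _ in range(n)]       # upper-triangular factor
    for j in range(n):
        v = [A[i][j] for i in range(m)]     # j-th column of A
        for k in range(j):
            R[k][j] = sum(Q[k][i] * v[i] for i in range(m))
            v = [v[i] - R[k][j] * Q[k][i] for i in range(m)]
        R[j][j] = math.sqrt(sum(vi * vi for vi in v))
        Q.append([vi / R[j][j] for vi in v])
    # Back-substitute R theta = Q^T y
    qty = [sum(Q[k][i] * y[i] for i in range(m)) for k in range(n)]
    theta = [0.0] * n
    for r in range(n - 1, -1, -1):
        theta[r] = (qty[r] - sum(R[r][k] * theta[k] for k in range(r + 1, n))) / R[r][r]
    return theta

# Hypothetical data: the normal equations give theta = [3.5, 1.4] exactly here.
X = [[1.0, 1.0], [1.0, 2.0], [1.0, 3.0], [1.0, 4.0]]
Y = [6.0, 5.0, 7.0, 10.0]
theta = qr_least_squares(X, Y)
print(theta)  # approximately [3.5, 1.4]
```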

Very nice post. Please correct me if I’m wrong, but it looks like you’re simply deriving the linear least square fit formula. It’s probably worth mentioning in the article.

@Andrei: Yup, it turns out to be the closed form for least squares fit. Recall, we are trying to minimize the square of the errors, which is indeed least squares. I will add a note on that… 🙂

Hello, is it the same as the MATLAB function polyfit?

@linkin8834: I have not played with polyfit(), but reading the Mathworks documentation on polyfit(), both seem to be similar.

http://www.mathworks.in/help/techdoc/ref/polyfit.html

“p = polyfit(x,y,n) finds the coefficients of a polynomial p(x) of degree n that fits the data, p(x(i)) to y(i), in a least squares sense.”
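To relate this to `polyfit`, here is a Python sketch of a least-squares polynomial fit that returns coefficients in descending powers, the ordering the MATLAB `polyfit` documentation uses. The function name `polyfit_sketch` is hypothetical, and it simply solves the normal equations $(V^T V)p = V^T y$ for the Vandermonde matrix $V$. With degree 1 the result is $[\theta_1, \theta_0]$, i.e. the slope comes first.

```python
def polyfit_sketch(x, y, deg):
    """Least-squares polynomial fit; coefficients in descending powers.
    Solves the normal equations (V^T V) p = V^T y, V = Vandermonde matrix."""
    V = [[xi ** (deg - k) for k in range(deg + 1)] for xi in x]
    n = deg + 1
    # Augmented matrix [V^T V | V^T y]
    M = [[sum(V[i][r] * V[i][c] for i in range(len(x))) for c in range(n)]
         + [sum(V[i][r] * y[i] for i in range(len(x)))] for r in range(n)]
    for c in range(n):  # Gaussian elimination with partial pivoting
        p = max(range(c, n), key=lambda r: abs(M[r][c]))
        M[c], M[p] = M[p], M[c]
        for r in range(c + 1, n):
            f = M[r][c] / M[c][c]
            M[r] = [a - f * b for a, b in zip(M[r], M[c])]
    coeffs = [0.0] * n
    for r in range(n - 1, -1, -1):
        coeffs[r] = (M[r][n] - sum(M[r][k] * coeffs[k] for k in range(r + 1, n))) / M[r][r]
    return coeffs

x = [1.0, 2.0, 3.0, 4.0, 5.0]
y = [3.0 + 2.0 * xi for xi in x]     # exactly on y = 3 + 2x
print(polyfit_sketch(x, y, 1))        # approximately [2.0, 3.0], i.e. [theta_1, theta_0]
```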