Weighted Least Squares and Locally Weighted Linear Regression

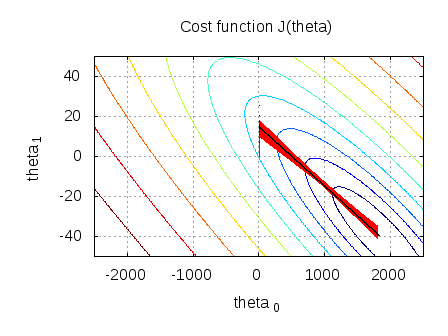

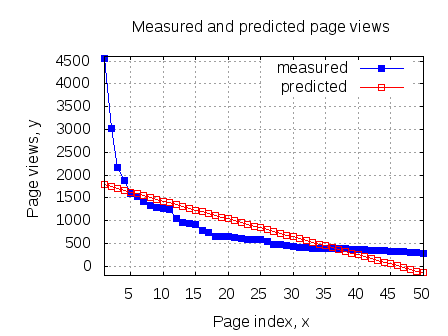

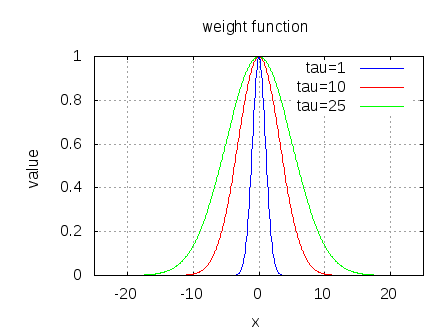

In the post on Closed Form Solution for Linear regression, we computed the parameter vector that minimizes the sum of the squared errors between the predicted values and the actual outputs over all examples in the training set. In that model, every example in the training set is given equal importance. Let us consider the case where it is known…
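To make the "equal importance" point concrete, here is a minimal sketch. It assumes a design matrix $X$ whose rows are the training inputs $x^{(i)}$ (with a leading 1 for the intercept), outputs $y^{(i)}$, and per-example nonnegative weights $w^{(i)}$ collected in a diagonal matrix $W$; this notation is assumed, not taken from the earlier post. The weighted objective and its closed-form solution are

$$J(\theta) = \sum_i w^{(i)} \left(y^{(i)} - \theta^T x^{(i)}\right)^2, \qquad \theta = (X^T W X)^{-1} X^T W y,$$

and ordinary least squares is the special case $W = I$, where $\theta = (X^T X)^{-1} X^T y$. The helpers `solve_linear_system`, `weighted_least_squares`, and `ordinary_least_squares` below are hypothetical names written in pure Python (no NumPy) so the example runs without dependencies; they solve the normal equations by Gaussian elimination rather than forming an explicit inverse.

```python
from typing import List, Sequence


def solve_linear_system(A: List[List[float]], b: List[float]) -> List[float]:
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(A)
    # Augmented matrix [A | b] so row operations update both sides together.
    M = [list(row) + [rhs] for row, rhs in zip(A, b)]
    for col in range(n):
        # Partial pivoting: bring the row with the largest entry in this column up.
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        if abs(M[pivot][col]) < 1e-12:  # crude singularity threshold for a sketch
            raise ValueError("X^T W X is singular; theta is not unique")
        M[col], M[pivot] = M[pivot], M[col]
        # Eliminate this column from every row below the pivot row.
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    # Back substitution on the upper-triangular system.
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(M[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (M[r][n] - s) / M[r][r]
    return x


def weighted_least_squares(X: Sequence[Sequence[float]],
                           y: Sequence[float],
                           w: Sequence[float]) -> List[float]:
    """Return theta minimizing sum_i w[i] * (y[i] - theta . X[i])**2."""
    m, d = len(X), len(X[0])
    # Normal equations: (X^T W X) theta = X^T W y, with W = diag(w).
    XtWX = [[sum(w[i] * X[i][j] * X[i][k] for i in range(m)) for k in range(d)]
            for j in range(d)]
    XtWy = [sum(w[i] * X[i][j] * y[i] for i in range(m)) for j in range(d)]
    return solve_linear_system(XtWX, XtWy)


def ordinary_least_squares(X: Sequence[Sequence[float]],
                           y: Sequence[float]) -> List[float]:
    # Equal importance for every training example: W = I.
    return weighted_least_squares(X, y, [1.0] * len(X))


if __name__ == "__main__":
    X = [[1, 0], [1, 1], [1, 2], [1, 3]]   # first column is the intercept term
    y = [2, 5, 8, 20]                      # last point deviates from y = 2 + 3x
    print(ordinary_least_squares(X, y))                 # every point counts equally
    print(weighted_least_squares(X, y, [1, 1, 1, 0]))   # last point ignored: ~[2, 3]
```

With equal weights the deviating last point pulls the fitted line toward it; giving that point a weight of zero removes its influence entirely, and the fit recovers the line $y = 2 + 3x$ through the remaining three points. Weights between 0 and 1 interpolate between these two extremes.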